Dissertation Topic: Real-Time Multi-Bounce Global Illumination Using ReSTIR and Neural Upscaling

As I'm now in my final year of the Computer Game Applications Development course at Abertay University, it's time to tackle my dissertation. For my topic, I'm diving deep into a specific challenge in modern graphics: combining Reservoir-based Spatio-Temporal Importance Resampling (ReSTIR) with a probe-based solution like Dynamic Diffuse Global Illumination (DDGI) to achieve real-time, multi-bounce lighting.

In the real world, light doesn't just hit a surface and stop. It bounces around everywhere! A red carpet will cast a subtle red glow on a white wall. Sunlight streaming through a window will bounce off the floor and light up the ceiling. This phenomenon of light bouncing around is called Global Illumination (GI), and it's the secret sauce that makes scenes look truly realistic and grounded.
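For anyone who wants the formal version, this bouncing is what the rendering equation (Kajiya, 1986) describes. The light leaving a point $\mathbf{x}$ in direction $\omega_o$ is the light the surface emits plus all incoming light that the surface reflects towards $\omega_o$ (with $\omega_i$ pointing away from the surface):

$$
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o) + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_i(\mathbf{x}, \omega_i)\, (\mathbf{n} \cdot \omega_i)\, d\omega_i
$$

The recursion hides in $L_i$: the light arriving at $\mathbf{x}$ from $\omega_i$ is the light leaving the nearest surface in that direction, which is given by the same equation. Each level of that recursion is one bounce, so baking, SSGI and probe-based methods are all different ways of approximating it.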

For years, getting this effect in video games has been a huge headache. The most common method is "baking" the lighting: artists set up a scene, and then the computer spends hours, sometimes even days, pre-calculating all those light bounces and storing the result in textures called lightmaps. The result looks great, but it's completely static. If a character walks through the scene or a light source moves, the bounced lighting can't react. It's like a photograph, not a living world.

More recent techniques like Screen Space Global Illumination (SSGI) do this in real time, but they have a massive flaw: they can only use what's currently on screen, meaning the depth and colour information the game has already rendered for this frame. If you turn the camera, the lighting can change drastically, because surfaces that move off-screen, or are hidden behind other objects, stop contributing bounced light.

My project is all about achieving fully dynamic, multi-bounce global illumination that runs in real time. I want to build a system where light behaves the way it does in the real world, even in an interactive game environment where anything can move.

My technical approach is to combine several key pieces of modern rendering technology:

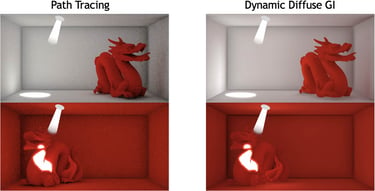

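More Bounces: I'm starting with a technique from NVIDIA called Dynamic Diffuse Global Illumination (DDGI). Think of it as placing a 3D grid of tiny light sensors, called probes, throughout the scene. Each probe uses ray tracing to record the light arriving from every direction, and each surface blends the eight probes around it to work out how much bounced light reaches it. The problem is, it's traditionally a single-bounce solution. My first big task is to upgrade it to trace multiple bounces, allowing light to travel and bleed through a scene far more naturally.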
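To make the multi-bounce idea concrete, here is a deliberately tiny toy model rather than DDGI itself: one scalar "probe" in a closed room whose walls all share the same albedo. It assumes, for illustration only, that each probe update shades its ray hits with direct light plus whatever the probe stored on the previous update, so every update adds one more bounce. The names `update_probe`, `simulate`, `direct`, `albedo` and `hysteresis` are hypothetical; a real DDGI volume stores per-direction irradiance and visibility for many probes.

```python
def update_probe(previous: float, direct: float, albedo: float, hysteresis: float) -> float:
    """One update of a single toy probe (hypothetical model, not the DDGI/RTXGI API).

    Light gathered this update = direct light + one extra bounce, where the extra
    bounce is read from the probe value left by the previous update.
    """
    gathered = direct + albedo * previous
    # Blend with the stored history (hysteresis in [0, 1)) to trade speed for stability.
    return hysteresis * previous + (1.0 - hysteresis) * gathered


def simulate(updates: int, direct: float = 1.0, albedo: float = 0.5,
             hysteresis: float = 0.0) -> list[float]:
    """Probe value after each update, starting from an unlit (zero) probe."""
    value = 0.0
    history = []
    for _ in range(updates):
        value = update_probe(value, direct, albedo, hysteresis)
        history.append(value)
    return history


if __name__ == "__main__":
    print(simulate(4))                        # [1.0, 1.5, 1.75, 1.875]
    print(simulate(200, hysteresis=0.9)[-1])  # approaches direct / (1 - albedo) = 2.0
```

With no hysteresis the probe climbs 1.0, 1.5, 1.75, 1.875: one extra bounce per update. Raising the hysteresis slows convergence but smooths noise, and the fixed point is the same either way, direct / (1 - albedo), which is the sum of the geometric series of bounces.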

ReSTIR: Just tracing more rays for every bounce is incredibly expensive, which is where ReSTIR comes in. In simple terms, ReSTIR is an algorithm that figures out which light paths matter most. Instead of blindly shooting rays everywhere, it draws a handful of cheap candidate samples and picks one in proportion to how much it is likely to contribute, storing it in a small per-pixel "reservoir". It then shares reservoirs with neighbouring pixels (spatial reuse) and carries them over from previous frames (temporal reuse). Essentially, it focuses the computational power where it matters most, which is key to getting a clean image quickly.
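The heart of ReSTIR is a tiny data structure: a weighted reservoir that streams through candidates and keeps one of them with probability proportional to its resampling weight (resampled importance sampling, as in the original ReSTIR paper by Bitterli et al., 2020). The sketch below uses scalar samples in [0, 1] instead of light paths, and the names are my own; it illustrates the technique rather than the dissertation's implementation. `combine` shows the reuse step, but it assumes every reservoir uses the same target function; real spatial and temporal reuse across pixels with different targets needs extra bias correction.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

Fn = Callable[[float], float]


@dataclass
class Reservoir:
    """Single-sample weighted reservoir, sketching the structure ReSTIR keeps per pixel."""
    sample: Optional[float] = None  # currently selected candidate y
    w_sum: float = 0.0              # running sum of resampling weights
    m: int = 0                      # number of candidates this reservoir represents
    W: float = 0.0                  # unbiased contribution weight of `sample`

    def _select(self, x: float, w: float, rng: random.Random) -> None:
        # Weighted reservoir sampling: replace the kept sample with probability w / w_sum.
        self.w_sum += w
        if w > 0.0 and rng.random() < w / self.w_sum:
            self.sample = x

    def update(self, x: float, w: float, rng: random.Random) -> None:
        self.m += 1
        self._select(x, w, rng)

    def finalize(self, target: Fn) -> None:
        # W = w_sum / (M * p_hat(y)); zero when no candidate had positive weight.
        p = target(self.sample) if self.sample is not None else 0.0
        self.W = self.w_sum / (self.m * p) if p > 0.0 else 0.0


def ris(candidates: Sequence[float], source_pdf: Fn, target: Fn, rng: random.Random) -> Reservoir:
    """Resampled importance sampling: candidates drawn from source_pdf, resampled towards target."""
    r = Reservoir()
    for x in candidates:
        r.update(x, target(x) / source_pdf(x), rng)
    r.finalize(target)
    return r


def combine(reservoirs: Sequence[Reservoir], target: Fn, rng: random.Random) -> Reservoir:
    """Merge reservoirs, as in spatial/temporal reuse. Assumes all share the same target."""
    out = Reservoir()
    for r in reservoirs:
        if r.sample is not None:
            # A reservoir stands in for its r.m candidates, so weight it by p_hat(y) * W * M.
            out._select(r.sample, target(r.sample) * r.W * r.m, rng)
        out.m += r.m
    out.finalize(target)
    return out


if __name__ == "__main__":
    # Toy problem: estimate the integral of f(x) = x^2 over [0, 1] (exactly 1/3)
    # with uniform candidates and a cheap, roughly proportional target p_hat(x) = x.
    def f(x: float) -> float:
        return x * x

    def p_hat(x: float) -> float:
        return x

    def uniform_pdf(x: float) -> float:
        return 1.0

    rng = random.Random(1)
    trials = 4000
    total = 0.0
    for _ in range(trials):
        r = ris([rng.random() for _ in range(16)], uniform_pdf, p_hat, rng)
        if r.sample is not None:
            total += f(r.sample) * r.W  # one-sample estimator f(y) * W
    print(total / trials)  # close to 1/3
```

Because the target only needs to be roughly proportional to the real contribution, it can be something cheap (for lighting, an unshadowed estimate, say), and the expensive work is spent only on the sample that survives resampling.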

DLSS & Ray Reconstruction: Once the lighting is calculated, there's still some noise and performance cost to deal with. That's why the final phase of my project is to integrate two of NVIDIA's AI technologies. Ray Reconstruction is a neural denoiser designed specifically for ray-traced graphics. With Deep Learning Super Sampling (DLSS), the game renders the scene at a lower resolution and a neural network upscales it to the output resolution, using motion vectors and previous frames, giving a large boost to frame rates with little noticeable loss in quality.
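As a rough illustration of how much work DLSS can save, the sketch below computes the internal render resolution for each quality mode. The per-axis scale factors are the commonly quoted approximate defaults and the mode names are my own labels; a real integration should ask the DLSS SDK for the recommended render resolution rather than hard-coding these numbers.

```python
from fractions import Fraction

# Commonly quoted approximate per-axis render scales for DLSS Super Resolution.
# Assumption: a real integration should query the recommended resolution from
# the DLSS SDK instead of hard-coding these. Mode names are my own labels.
RENDER_SCALE = {
    "quality": Fraction(2, 3),
    "balanced": Fraction(29, 50),        # about 0.58
    "performance": Fraction(1, 2),
    "ultra_performance": Fraction(1, 3),
}


def render_resolution(out_width: int, out_height: int, mode: str) -> tuple[int, int]:
    """Internal resolution the scene is rendered at before DLSS upscales it."""
    scale = RENDER_SCALE[mode]
    return round(out_width * scale), round(out_height * scale)


def shaded_pixel_fraction(mode: str) -> float:
    """Share of output pixels actually shaded; the scale applies to both axes."""
    return float(RENDER_SCALE[mode] ** 2)


if __name__ == "__main__":
    for mode in RENDER_SCALE:
        w, h = render_resolution(3840, 2160, mode)
        print(f"{mode:>17}: {w}x{h} ({shaded_pixel_fraction(mode):.0%} of pixels)")
```

At a 4K output, Performance mode renders 1920x1080, a quarter of the pixels. Per-pixel work such as ReSTIR resampling roughly scales with that, while the probe updates don't depend on screen resolution at all.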

Of course, an idea is just an idea until you can prove it, so I'll be running a ton of tests. I'll compare my final results against offline-rendered reference images from Blender's Cycles path tracer (the "ground truth"). I'll also measure performance as frame time in milliseconds on a range of GPUs, from top-tier cards down to mid-range ones, to see how well the solution scales.
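The post doesn't pin down an image-quality metric, so as an assumption here is a minimal sketch using two simple ones, RMSE and PSNR, against a Cycles reference, plus a frame-time summary. It assumes both images have already been exported as flattened lists of linear values in [0, 1] with matching exposure and tone mapping, and that per-frame GPU times in milliseconds were captured elsewhere (for example with GPU timestamp queries). The function names are hypothetical, and perceptual metrics such as SSIM or NVIDIA's FLIP may track visible differences better.

```python
import math
from typing import Sequence


def rmse(image: Sequence[float], reference: Sequence[float]) -> float:
    """Root-mean-square error between two flattened images of linear values."""
    if len(image) != len(reference) or not image:
        raise ValueError("images must be non-empty and the same size")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(image, reference)) / len(image))


def psnr(image: Sequence[float], reference: Sequence[float], peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB (higher is closer); infinite for identical images."""
    error = rmse(image, reference)
    return math.inf if error == 0.0 else 20.0 * math.log10(peak / error)


def frame_stats(frame_times_ms: Sequence[float], percentile: float = 99.0) -> dict:
    """Mean frame time, the equivalent average FPS, and a nearest-rank percentile frame time."""
    times = sorted(frame_times_ms)
    mean_ms = sum(times) / len(times)
    rank = max(1, math.ceil(percentile * len(times) / 100.0))
    return {"mean_ms": mean_ms, "fps": 1000.0 / mean_ms, "percentile_ms": times[rank - 1]}


if __name__ == "__main__":
    print(psnr([0.0, 0.0, 0.0, 0.0], [0.1, 0.1, 0.1, 0.1]))  # about 20 dB
    print(frame_stats([10.0, 20.0, 30.0]))
```

Reporting a high percentile alongside the mean is worthwhile because occasional stutters, which an average hides, show up in the slowest frames.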

The goal is to prove that we can achieve lighting that's nearly indistinguishable from path-traced renders, all while maintaining the high frame rates that games demand.